All
- Moved everything to the docker folder. Getting ready to start sharing other ways to deploy HELK (Terraform & Packer, maybe).

Compose-files
- Basic & Trial Elastic Subscriptions are now available and can be managed automatically via the helk_install script.

ELK Version: 6.3.2

Elasticsearch
- ES_JAVA_OPTS is set to 4GB by default and can be modified via docker-compose; if it is not set, half of the host memory is used.
- Added an entrypoint script and now using docker-entrypoint to start ES.

Logstash
- Big pipeline update by Nate Guagenti (@neu5ron):
  - Better CLI & file name searching
  - "dst_ip_public:true" filters out all RFC1918/non-routable addresses
  - Geo ASName
  - Identification of 16+ Windows IP fields
  - Arrayed IPs support
  - IPv6 & IPv4 differentiation
  - Removal of "-" values, and MORE!!!
  - THANK YOU SO MUCH NATE!!!
  - PR: https://github.com/Cyb3rWard0g/HELK/pull/93
- Added an entrypoint script to push new output_templates straight to Elasticsearch, per Nate's recommendation.
- Logstash now starts with docker-entrypoint.
- "event_data" is now taken out of winlogbeat logs to allow integration with nxlog (sauce added by Nate Guagenti (@neu5ron)).

Kibana
- Kibana yml file updated to allow a longer timeout.

Nginx
- Handles communications to Kibana and Jupyterhub via port 443 (SSL).
- Certificate and key get created at build time.
- Nate added several settings to improve the way nginx operates.

Jupyterhub
- Multiple users and multiple notebooks open at the same time are now possible.
- Jupyterhub now has 3 users (hunter1, hunter2, hunter3), and the password pattern is `<user>P@ssw0rd!`
- Every notebook created is also JupyterLab.
- Updated ES-Hadoop to 6.3.2.

Kafka Update
- 1.1.1 update

Spark Master + Brokers
- Reduced memory for brokers by default to 512m.

Resources
- Added new images for the Wiki.
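The Elasticsearch note above describes a fallback. If ES_JAVA_OPTS is not provided (docker-compose normally supplies the 4GB default), the heap is sized to half of the host memory. The shell sketch below shows that logic. It is not HELK's actual entrypoint. The function name `half_memory_heap_opts`, reading `MemTotal` from `/proc/meminfo`, and expressing the heap in megabytes are all assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not HELK's entrypoint: default ES_JAVA_OPTS to half of host RAM.

# Print matching -Xms/-Xmx flags equal to half of MemTotal, in megabytes.
# Takes an optional meminfo-style file (assumed default: /proc/meminfo on Linux).
half_memory_heap_opts() {
  local meminfo="${1:-/proc/meminfo}"
  local total_kb half_mb
  # MemTotal is reported in kB, e.g. "MemTotal:  8388608 kB".
  total_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  half_mb=$(( total_kb / 1024 / 2 ))
  echo "-Xms${half_mb}m -Xmx${half_mb}m"
}

# Keep any value passed in (e.g. via docker-compose); otherwise fall back to half of RAM.
if [ -z "${ES_JAVA_OPTS:-}" ]; then
  ES_JAVA_OPTS="$(half_memory_heap_opts)"
fi
export ES_JAVA_OPTS
```

The sketch sets -Xms and -Xmx to the same value, which follows Elastic's heap-sizing guidance. That guidance also keeps the heap below the compressed-oops threshold (roughly 32GB), which this sketch does not enforce.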


HELK [Alpha]

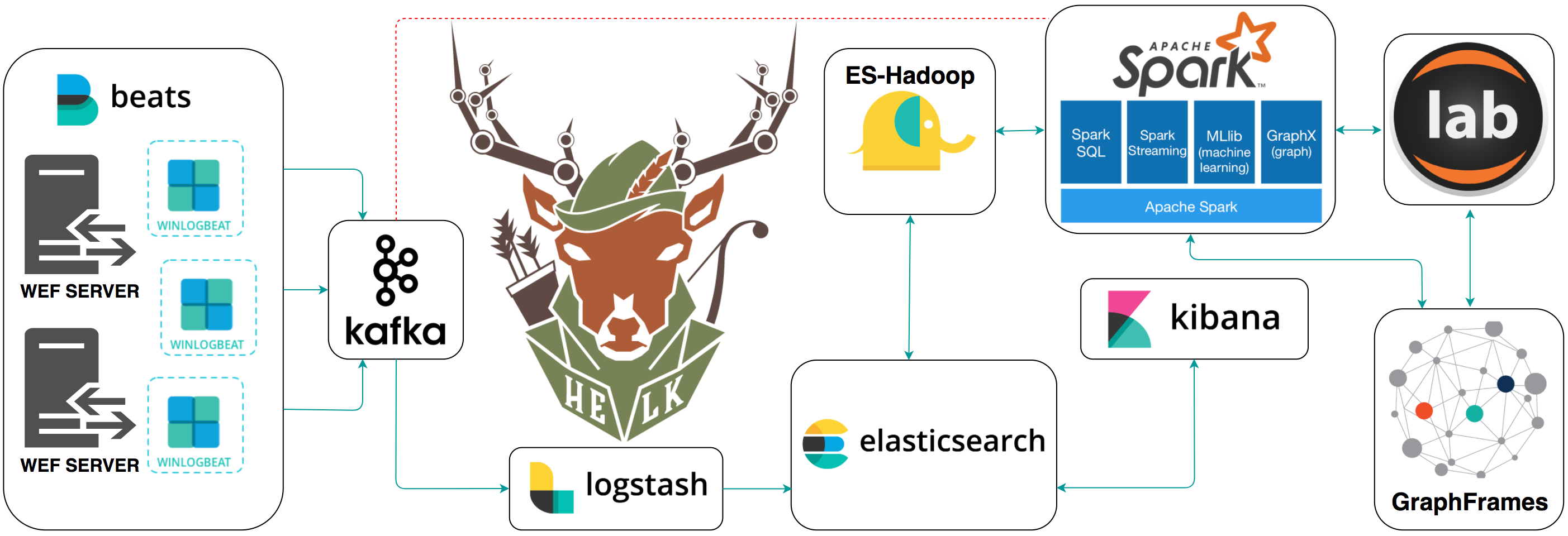

A Hunting ELK (Elasticsearch, Logstash, Kibana) with advanced analytic capabilities.

Goals

- Provide a free hunting platform to the community and share the basics of Threat Hunting.

- Make sense of a large amount of event logs and add more context to suspicious events during hunting.

- Expedite the time it takes to deploy an ELK stack.

- Improve the testing of hunting use cases in an easier and more affordable way.

- Enable Data Science via Apache Spark, GraphFrames & Jupyter Notebooks.

Current Status: Alpha

The project is currently in an alpha stage, which means that the code and the functionality are still changing. We haven't yet tested the system with large data sources or in many scenarios. We invite you to try it and welcome any feedback.

HELK Features

- Kafka: A distributed publish-subscribe messaging system that is designed to be fast, scalable, fault-tolerant, and durable.

- Elasticsearch: A highly scalable open-source full-text search and analytics engine.

- Logstash: A data collection engine with real-time pipelining capabilities.

- Kibana: An open source analytics and visualization platform designed to work with Elasticsearch.

- ES-Hadoop: An open-source, stand-alone, self-contained, small library that allows Hadoop jobs (whether using Map/Reduce or libraries built upon it such as Hive, Pig or Cascading, or new upcoming libraries like Apache Spark) to interact with Elasticsearch.

- Spark: A fast and general-purpose cluster computing system. It provides high-level APIs in Java, Scala, Python and R, and an optimized engine that supports general execution graphs.

- GraphFrames: A package for Apache Spark which provides DataFrame-based Graphs.

- Jupyter Notebook: An open-source web application that allows you to create and share documents that contain live code, equations, visualizations and narrative text.

Resources

- Welcome to HELK! : Enabling Advanced Analytics Capabilities

- Spark

- Spark Standalone Mode

- Setting up a Pentesting.. I mean, a Threat Hunting Lab - Part 5

- An Integrated API for Mixing Graph and Relational Queries

- Graph queries in Spark SQL

- Graphframes Overview

- Elastic Products

- Elastic Subscriptions

- Elasticsearch Guide

- spujadas elk-docker

- deviantony docker-elk

Getting Started

WIKI

(Docker) Accessing the HELK's Containers

By default, the HELK's containers are run in the background (Detached). You can see all your docker containers by running the following command:

```shell
sudo docker ps
```

```
CONTAINER ID   IMAGE                                  COMMAND                  CREATED             STATUS             PORTS                                            NAMES
a97bd895a2b3   cyb3rward0g/helk-spark-worker:2.3.0    "./spark-worker-entr…"   About an hour ago   Up About an hour   0.0.0.0:8082->8082/tcp                           helk-spark-worker2
cbb31f688e0a   cyb3rward0g/helk-spark-worker:2.3.0    "./spark-worker-entr…"   About an hour ago   Up About an hour   0.0.0.0:8081->8081/tcp                           helk-spark-worker
5d58068aa7e3   cyb3rward0g/helk-kafka-broker:1.1.0    "./kafka-entrypoint.…"   About an hour ago   Up About an hour   0.0.0.0:9092->9092/tcp                           helk-kafka-broker
bdb303b09878   cyb3rward0g/helk-kafka-broker:1.1.0    "./kafka-entrypoint.…"   About an hour ago   Up About an hour   0.0.0.0:9093->9093/tcp                           helk-kafka-broker2
7761d1e43d37   cyb3rward0g/helk-nginx:0.0.2           "./nginx-entrypoint.…"   About an hour ago   Up About an hour   0.0.0.0:80->80/tcp                               helk-nginx
ede2a2503030   cyb3rward0g/helk-jupyter:0.32.1        "./jupyter-entrypoin…"   About an hour ago   Up About an hour   0.0.0.0:4040->4040/tcp, 0.0.0.0:8880->8880/tcp   helk-jupyter
ede19510e959   cyb3rward0g/helk-logstash:6.2.4        "/usr/local/bin/dock…"   About an hour ago   Up About an hour   5044/tcp, 9600/tcp                               helk-logstash
e92823b24b2d   cyb3rward0g/helk-spark-master:2.3.0    "./spark-master-entr…"   About an hour ago   Up About an hour   0.0.0.0:7077->7077/tcp, 0.0.0.0:8080->8080/tcp   helk-spark-master
6125921b310d   cyb3rward0g/helk-kibana:6.2.4          "./kibana-entrypoint…"   About an hour ago   Up About an hour   5601/tcp                                         helk-kibana
4321d609ae07   cyb3rward0g/helk-zookeeper:3.4.10      "./zookeeper-entrypo…"   About an hour ago   Up About an hour   2888/tcp, 0.0.0.0:2181->2181/tcp, 3888/tcp       helk-zookeeper
9cbca145fb3e   cyb3rward0g/helk-elasticsearch:6.2.4   "/usr/local/bin/dock…"   About an hour ago   Up About an hour   9200/tcp, 9300/tcp                               helk-elasticsearch
```

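Then pick the container you want to access and run the following command, replacing `<container-name>` with its name or ID (for example, helk-jupyter):

```shell
sudo docker exec -ti <container-name> bash
```

You should then get a shell inside the container:

```
root@ede2a2503030:/opt/helk/scripts#
```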
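If you only care about the HELK containers, a short shell sketch can list their names and open a shell in one of them. The helper names `helk_containers` and `helk_shell` are hypothetical. The sketch assumes the `helk-` name prefix seen in the `docker ps` output above. It also assumes your user can run docker without sudo, for example by being in the docker group.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for picking a HELK container by name.
# Assumes docker is usable without sudo (e.g. your user is in the docker group).

# Print the names of running containers whose name starts with "helk-".
# docker ps --format '{{.Names}}' prints only the NAMES column, one per line.
helk_containers() {
  docker ps --format '{{.Names}}' | grep '^helk-' | sort
}

# Open an interactive bash shell in the named HELK container.
helk_shell() {
  local name="$1"
  # Refuse names that are not a running HELK container (exact line match).
  if ! helk_containers | grep -qx -- "$name"; then
    echo "no running HELK container named '$name'" >&2
    return 1
  fi
  docker exec -ti "$name" bash
}
```

After sourcing the snippet, `helk_containers` should print names such as helk-elasticsearch and helk-jupyter. `helk_shell helk-jupyter` then runs the same `docker exec -ti ... bash` command shown above.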

Author

- Roberto Rodriguez @Cyb3rWard0g @THE_HELK

Contributors

- Jose Luis Rodriguez @Cyb3rPandaH

- Robby Winchester @robwinchester3

- Jared Atkinson @jaredatkinson

- Nate Guagenti @neu5ron

- Jordan Potti @ok_bye_now

- Lee Christensen @tifkin_

Contributing

There are a few things that I would like to accomplish with the HELK, as shown in the To-Do list below. I would love to make the HELK a stable build for everyone in the community. If you are interested in making this build more robust and adding some cool features to it, PLEASE feel free to submit a pull request. #SharingIsCaring

License: GPL-3.0

HELK's GNU General Public License

To-Do

- Upload basic Kibana Dashboards

- Integrate Spark & Graphframes

- Add Jupyter Notebook on top of Spark

- Kafka Integration

- Default X-Pack Basic - Free License Build for ELK Stack

- Spark Standalone Cluster Manager integration

- Apache Arrow Integration for Pandas Dataframes

- Zeppelin Notebook Docker option

- KSQL Client & Server Deployment (Waiting for v5.0)

- Kubernetes Cluster Migration

- OSQuery Data Ingestion

- Create Jupyter Notebooks showing how to use Spark & GraphFrames

- MITRE ATT&CK mapping to logs or dashboards

- Cypher for Apache Spark Integration (Might have to switch from Jupyter to Zeppelin Notebook)

- Somehow integrate Neo4j Spark connectors with the build

- Nxlog parsers (Logstash Filters)

- Add more network data sources (e.g., Bro)

- Research & integrate spark structured direct streaming

More coming soon...